Reality Promises has been recognized with the Best Paper Award at ACM UIST 2025 in Busan, South Korea.

Older News

I have obtained my PhD (Dr. rer. nat.) in Computer Science after submitting and defending my dissertation on Situated and Semantics-Aware Mixed Reality.

My dissertation is available below, along with some Top 3 moments of my PhD time. A few thoughts about my PhD time can be found here.

Academic Experience

Industry Experience

Advised by Dr. Tobias Grosse-Puppendahl and Jochen Gross, I co-founded Porsche Emerging Tech Research and made it successful within 18 months (incl. 2 first-author papers and > 15 patent filings). My work focused on context-aware in-car and around-car mixed reality systems. I hired and guided people, built the ML stack, and pushed organizational boundaries on the way.

Before my PhD, I was advised by Dr. Andrada Junge and Martin Mayer as a data science intern on vehicle quality analytics, authored my master's thesis on estimating remaining EV battery life from past usage in a fleet-learning approach, and joined as a freelance data engineer to build a parallelized terabyte-scale vehicle data processing pipeline.

Selected Publications

For a full list of publications, please refer to my Google Scholar profile.

Papers

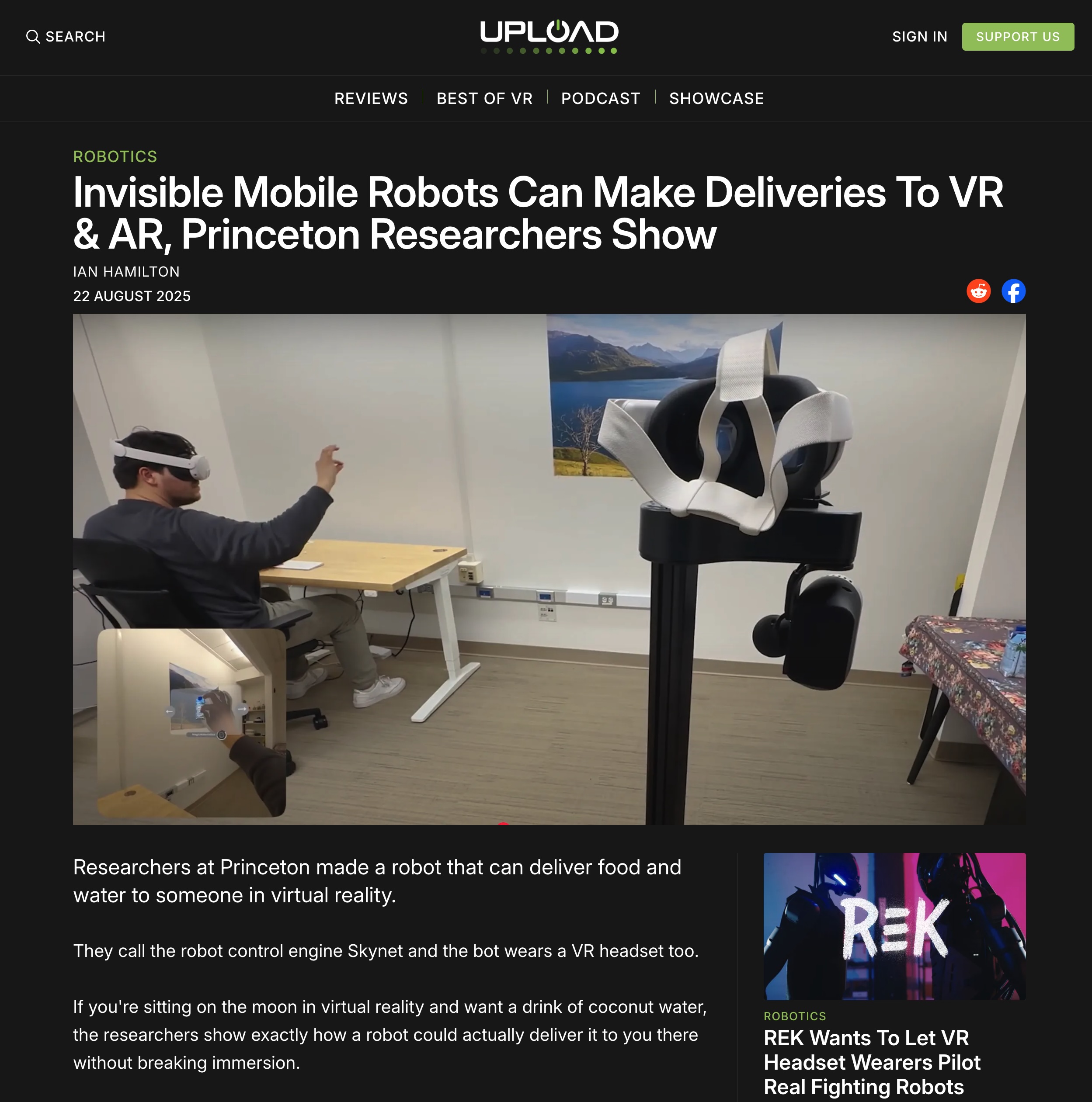

UploadVR. Original Story. Invisible Mobile Robots Can Make Deliveries To VR & AR, Princeton Researchers Show (by Ian Hamilton)

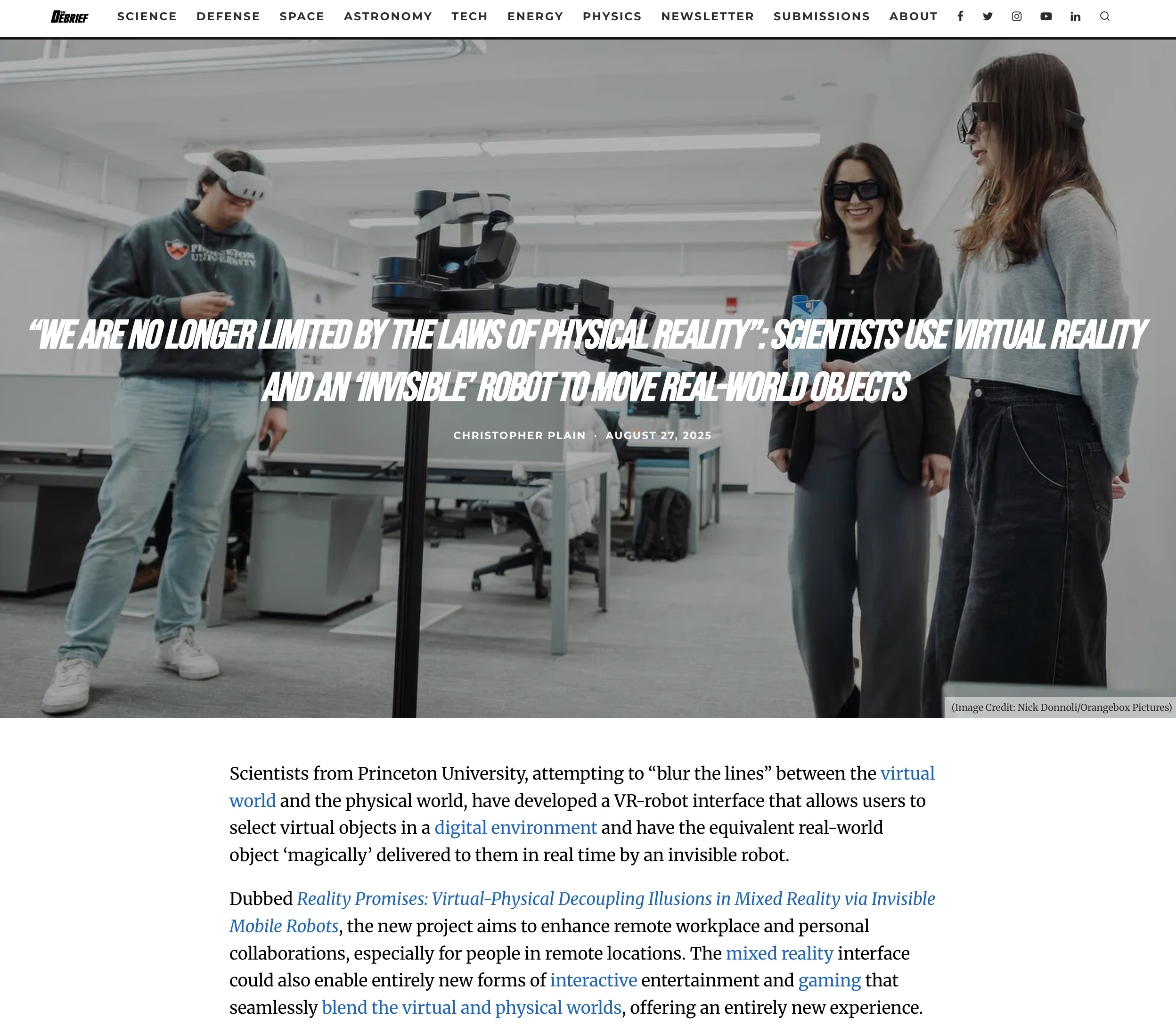

Princeton Engineering. Original Story. Erasing the seams between the virtual and physical worlds (by Julia Schwarz)

Hackster.io. May the Mixed Reality Be with You (by Nick Bild)

Rocking Robots. Robot laat virtuele en fysieke wereld samensmelten (by Pieter Werner)

AZo Robotics. Princeton Scientists Use 'Invisible' Robots to Bring Virtual Objects Into the Real World (by Soham Nandi)

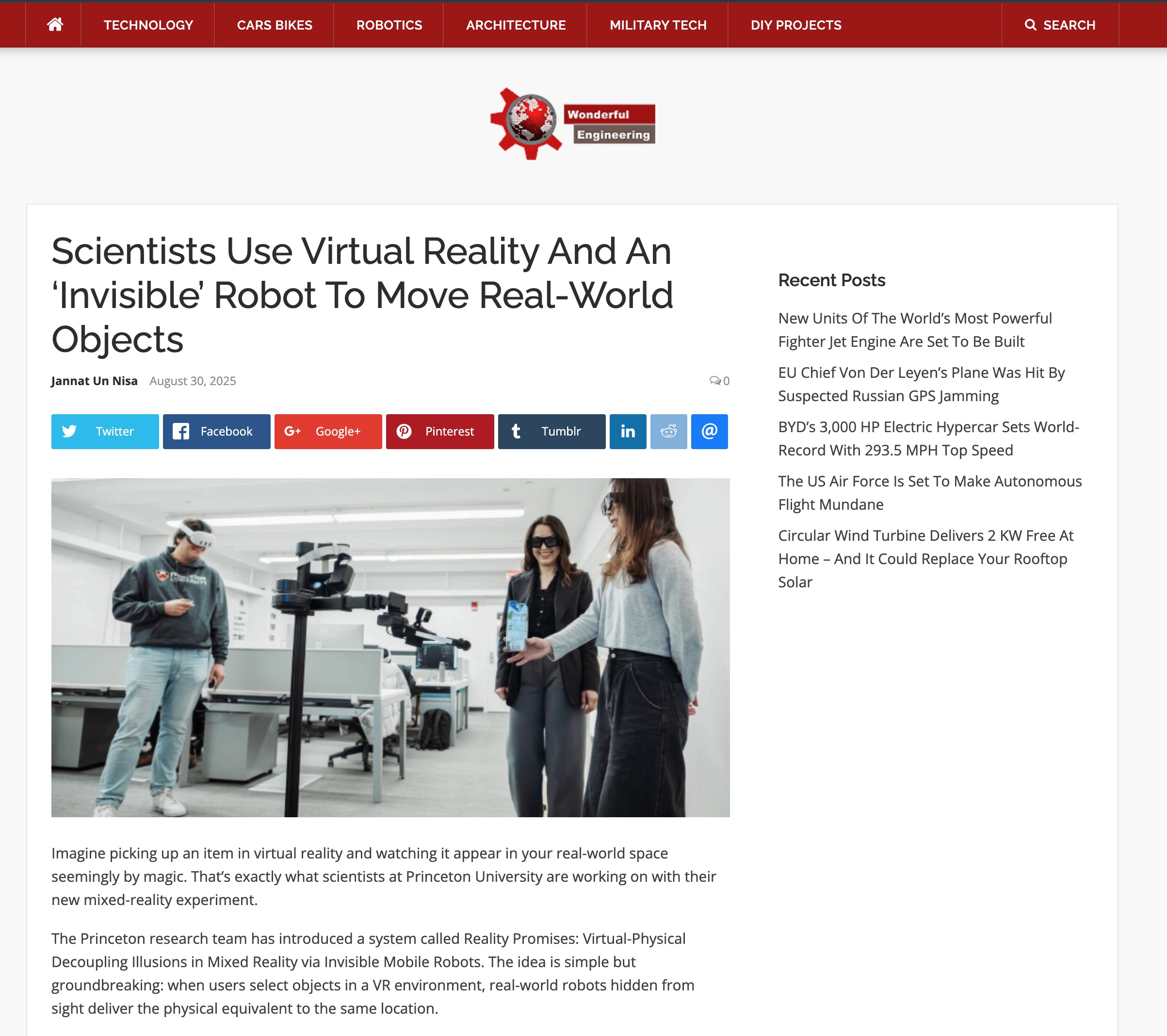

Wonderful Engineering. Scientists Use Virtual Reality And An 'Invisible' Robot To Move Real-World Objects (by Jannat Un Nisa)

TechXplore. Virtual reality merges with robotics to create seamless physical interactions.

DigiTrendz. Princeton's Invisible Robots Deliver in VR & AR.

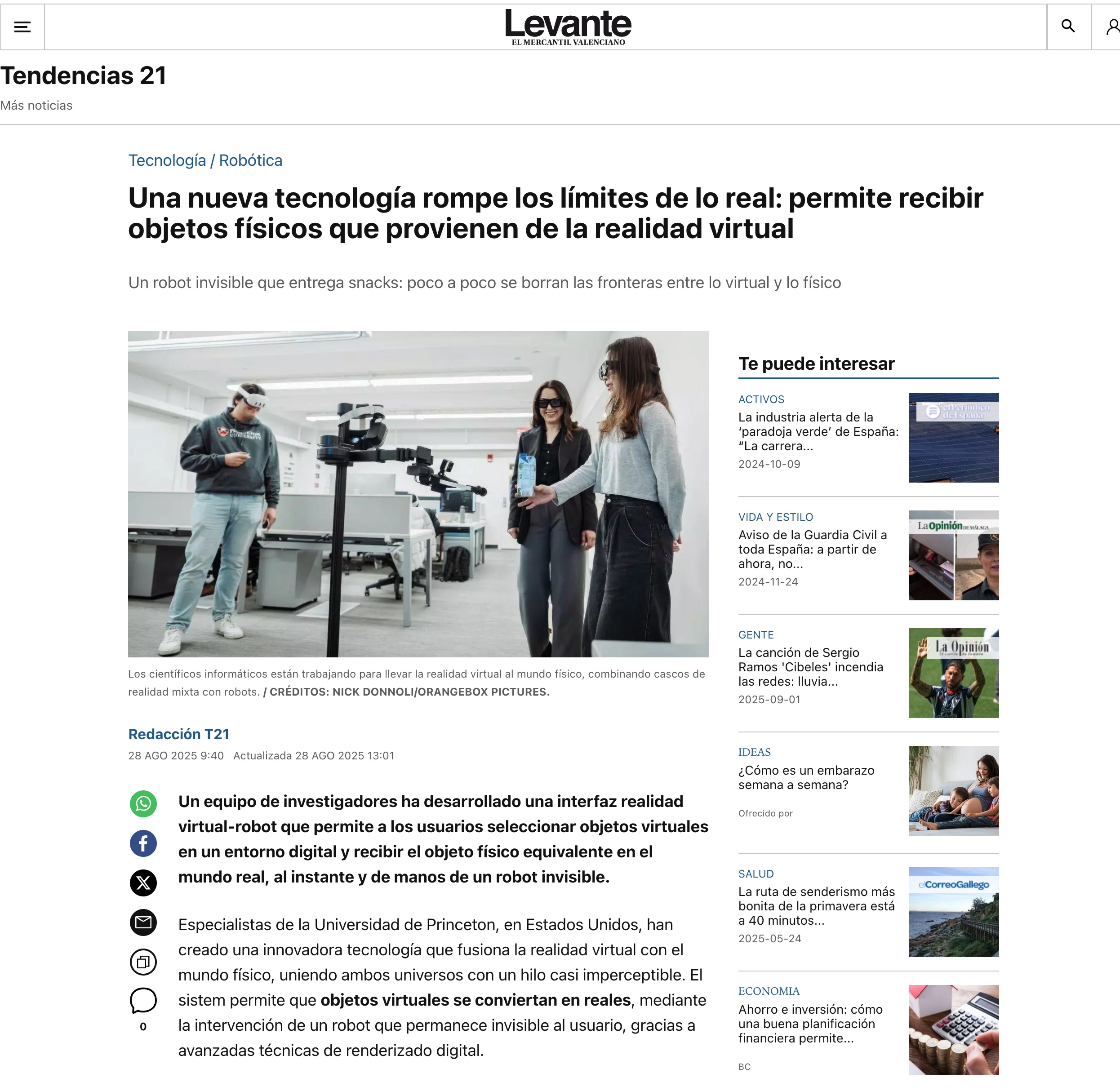

Levante. El Mercantil Valenciano. Una nueva tecnología rompe los límites de lo real: permite recibir objetos físicos que provienen de la realidad virtual.

ScienMag. Blurring the Boundaries Between Virtual and Physical Worlds

International Society for Presence Research. Princeton researchers use invisible robots to erase the seams between the virtual and physical worlds

Realité Virtuelle. Ces chercheurs créent une techno si folle qu'elle rend les robots… invisibles (Alternate)

Dissertation

Computers moved from the basement onto the user's desk, into their pocket, and around their wrist. Not only did they physically converge to the user's space of action, but sensors such as CMOS, GPS, NFC, and LiDAR also began to provide access to the environment, thus situating them in the user's direct reality. However, the user and their computer exhibit fundamentally different perceptual processes, resulting in substantially different internal representations of reality.

Augmented and Mixed Reality systems, as the most advanced situated computing devices of today, are mostly centered around geometric representations of the world around them, such as planes, point clouds, or meshes. This way of thinking has proven useful in tackling classical problems including tracking or visual coherence. However, little attention has been directed toward a semantic and user-oriented understanding of the world and the specific situational parameters a user is facing. This is despite the fact that humans not only characterize their environment by geometries, but they also consider objects, the relationships between objects, their meanings, and the affordance of objects. Furthermore, humans observe other humans, how they interact with objects and other people, all together stimulating intent, desire, and behavior. The resulting gap between the human-perceived and the machine-perceived world impedes the computer's potential to seamlessly integrate with the user's reality. Instead, the computer continues to exist as a separate device, in its own reality, reliant on user input to align its functionality to the user's objectives.

This dissertation on Situated and Semantics-Aware Mixed Reality aims to get closer to Augmented and Mixed Reality experiences, applications, and interactions that address this gap and seamlessly blend with a user's space and mind. It aims to contribute to the integration of interactions and experiences with the physicality of the world based on creating an understanding of both the user and their environment in dynamic situations across space and time.

Method-wise, the research presented in this dissertation is concerned with the design, implementation, and evaluation of novel, distributed, semantics-aware, spatial, interactive systems, and, to this end, the application of state-of-the-art computer vision for scene reconstruction and understanding, together enabling Situated and Semantics-Aware Mixed Reality.

Blog and Projects

Find my blog on computing, ML infrastructure, and other stuff here.

Projects

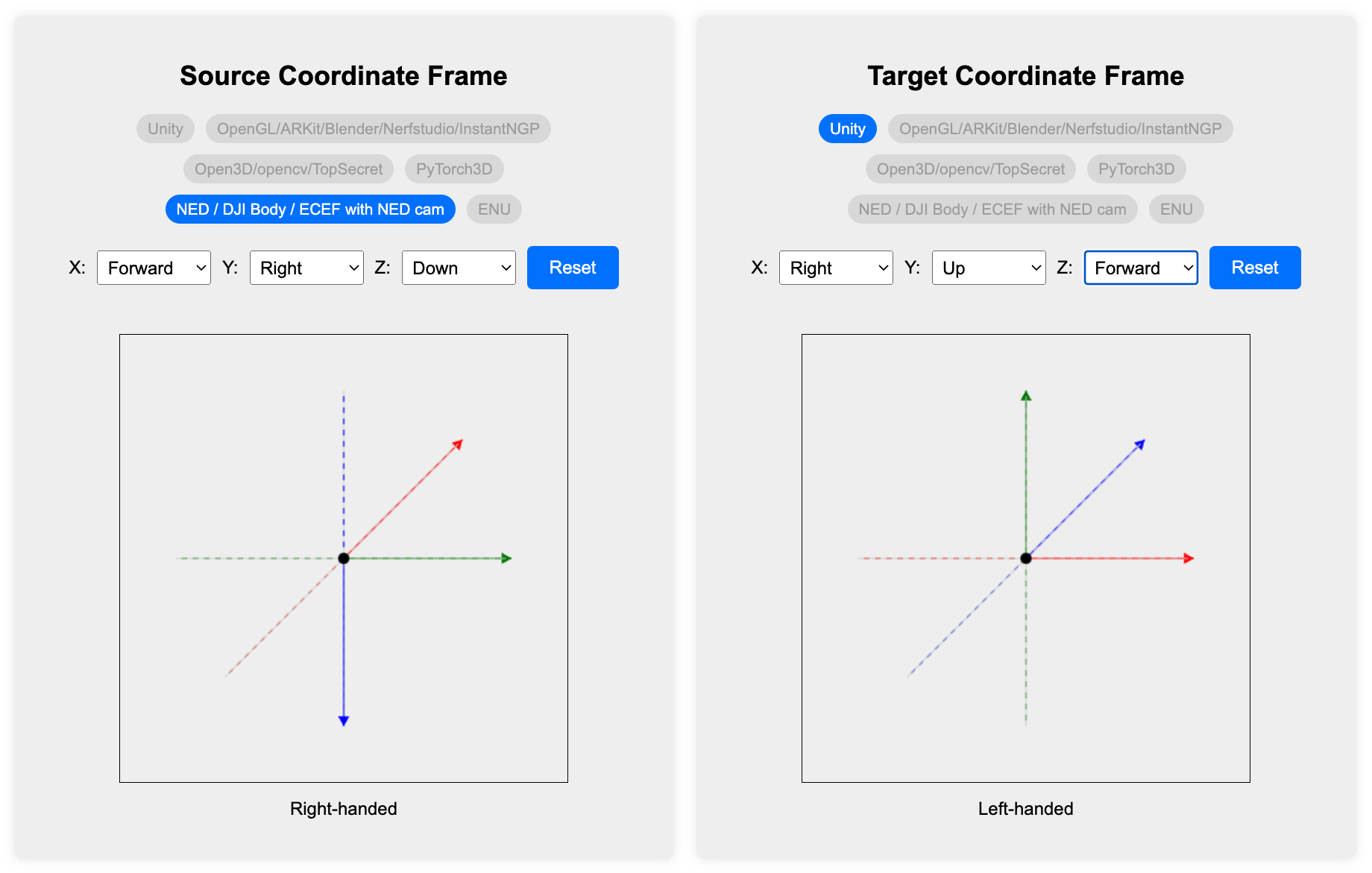

3D Coordinate Converter

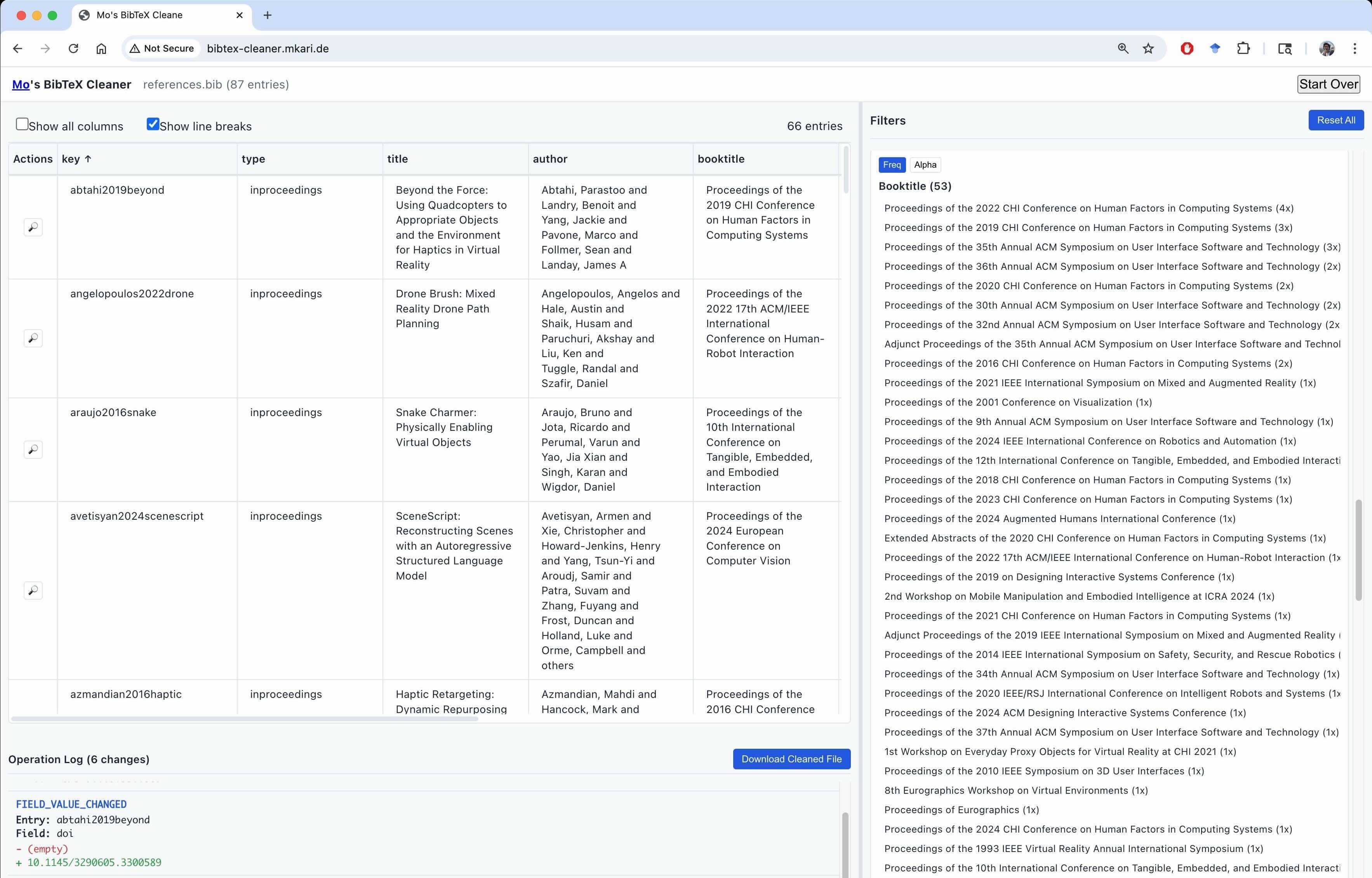

BibTeX Cleaner

Blog Posts

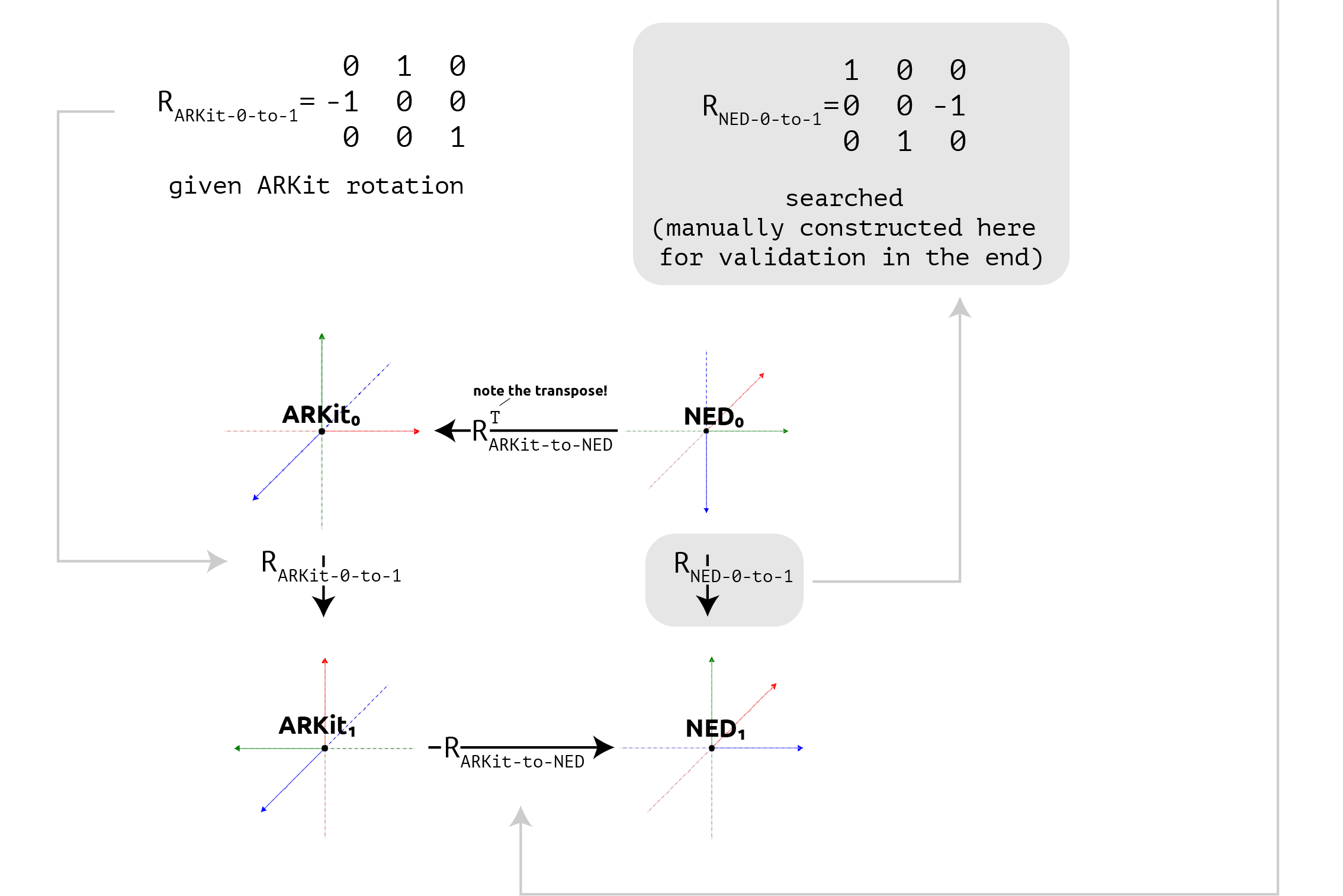

Camera Conventions, Transforms, and Conversions. Jun 22, 2024.

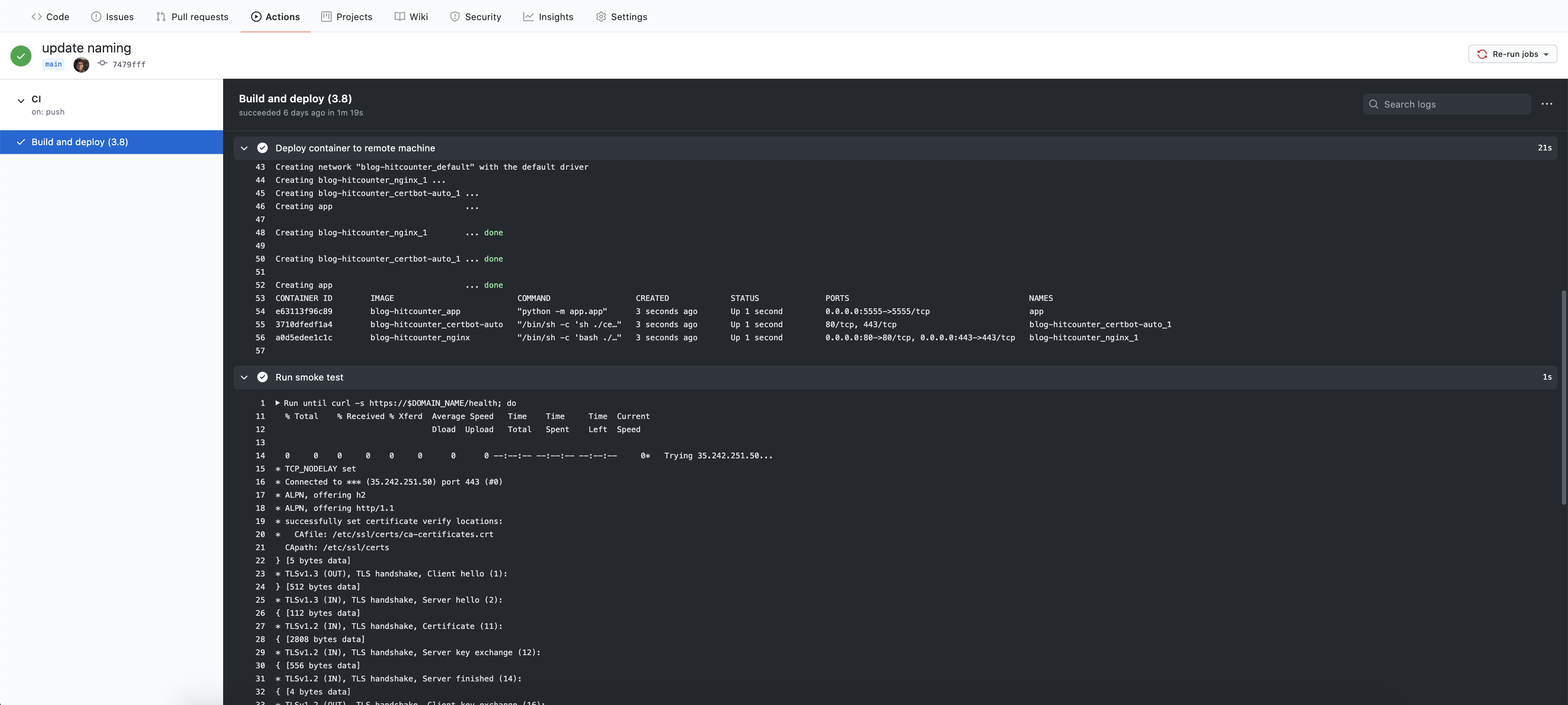

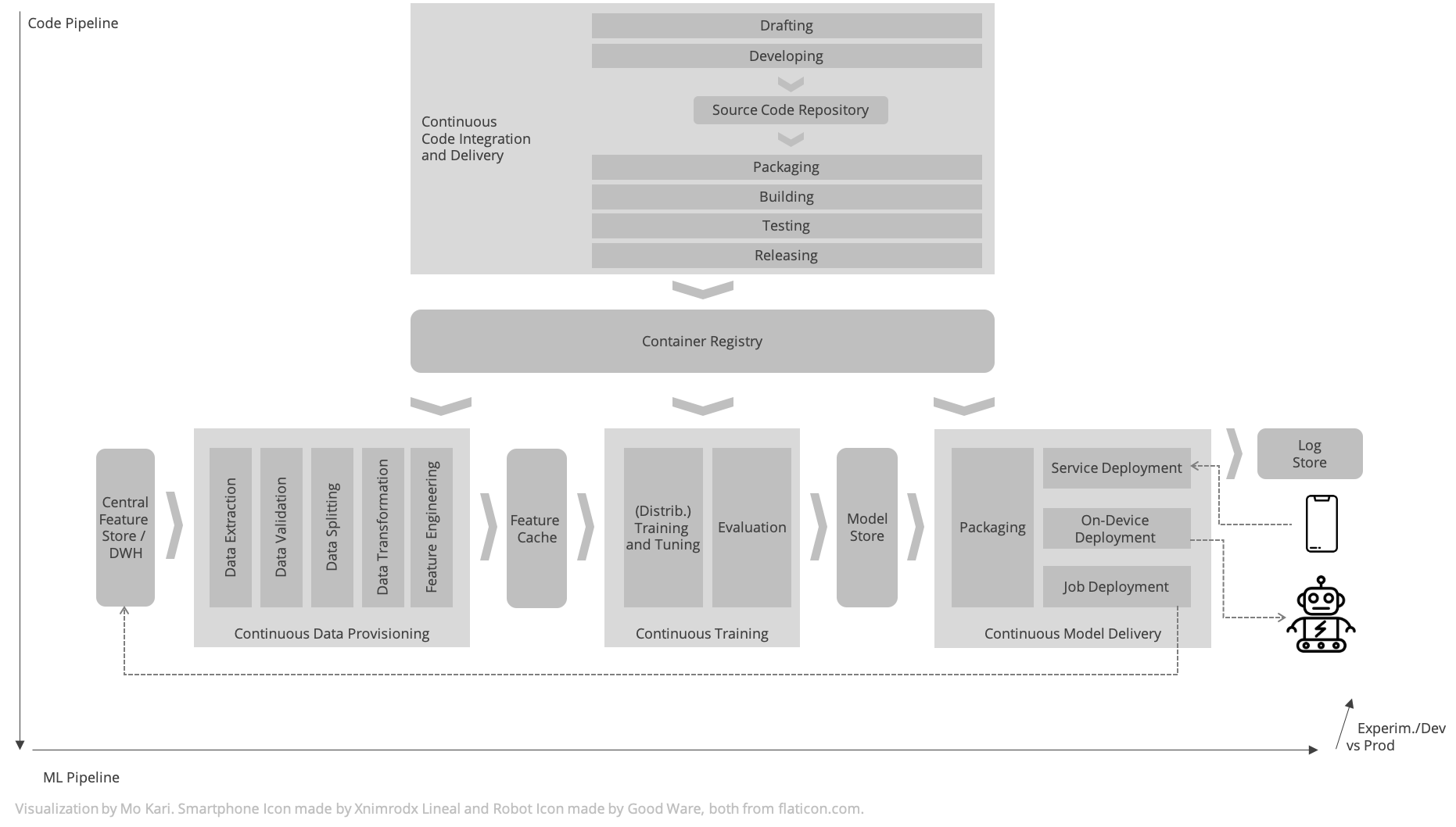

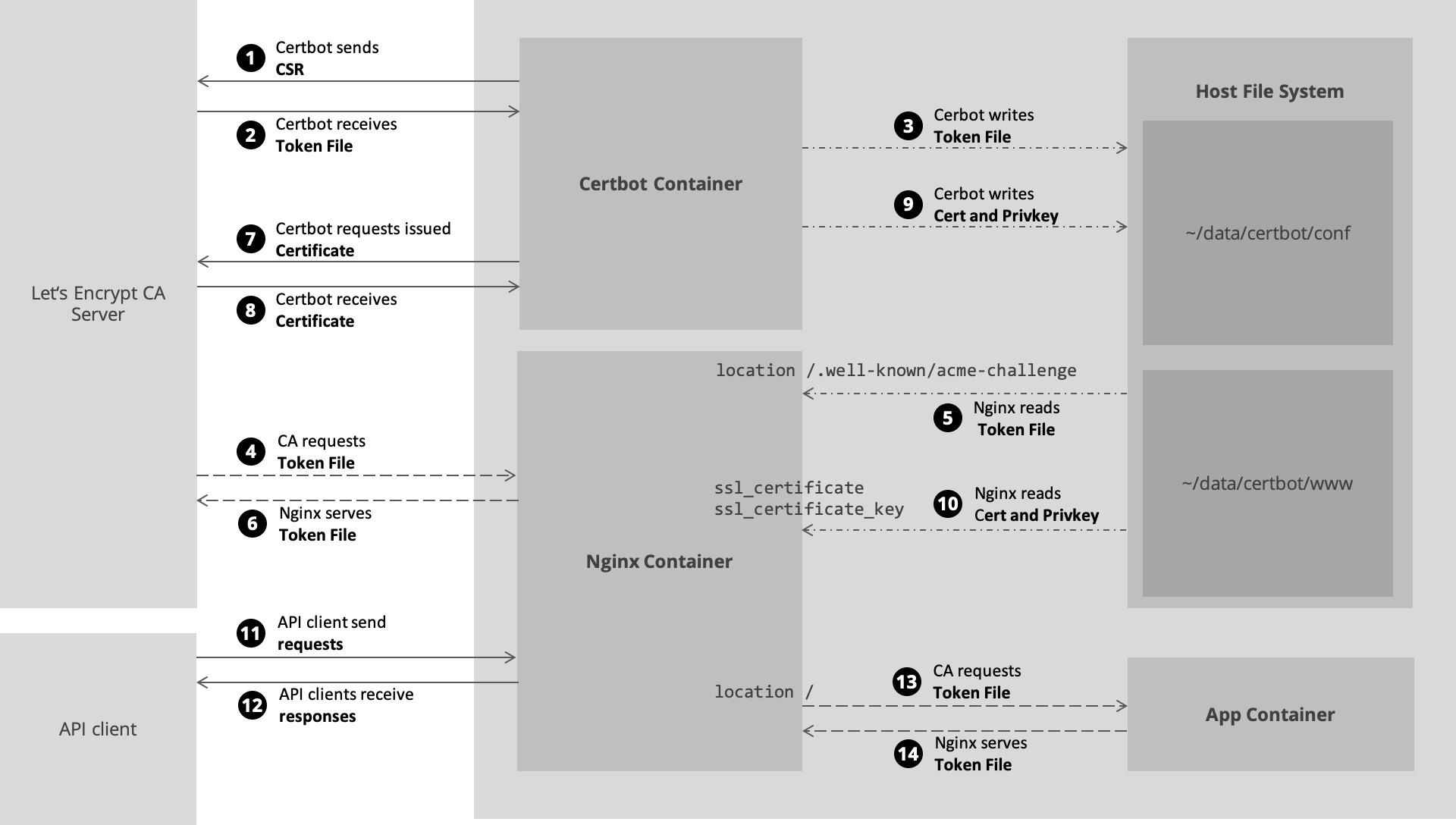

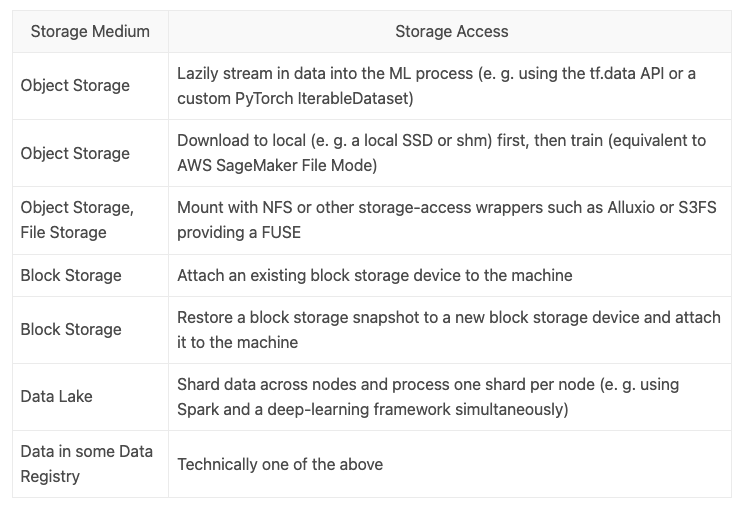

Primitives of MLOps Infrastructure. Nov 23, 2020.

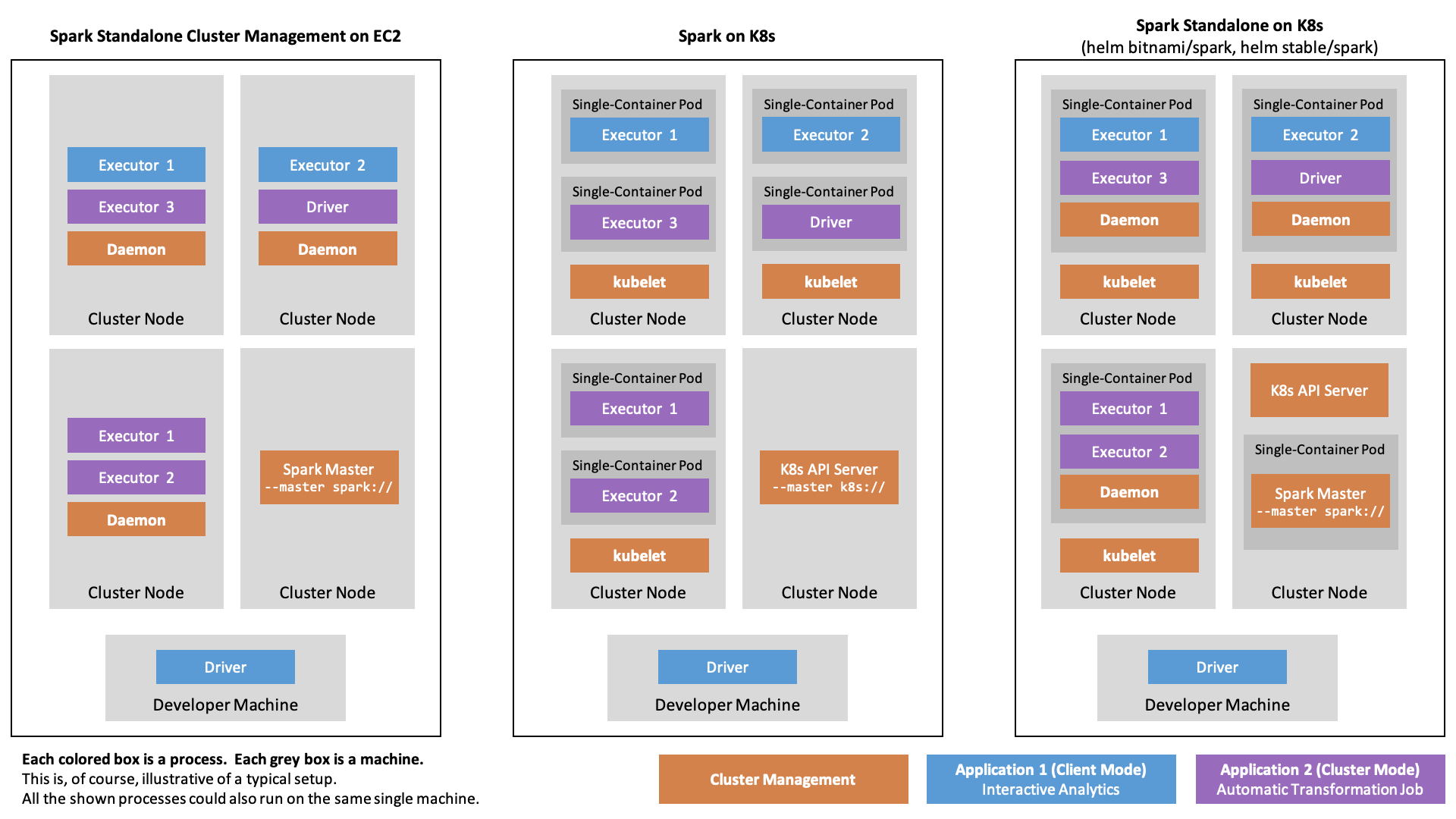

Native Spark on Kubernetes. Oct 6, 2020.

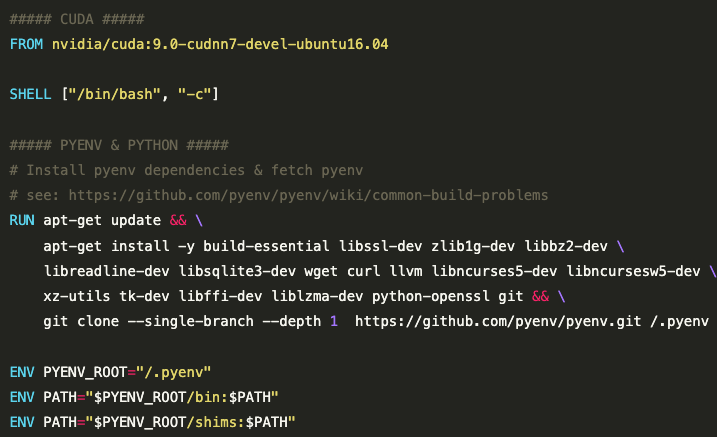

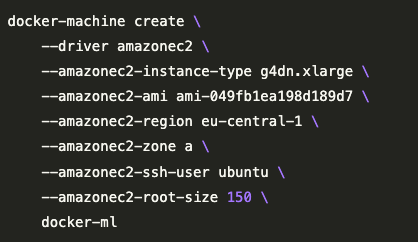

Reproducible ML Models using Docker. Jul 5, 2020.

Website-only Projects

PhD Top 3 Experiences

Top 3: Conference Talks

Top 3: Awesome Offices

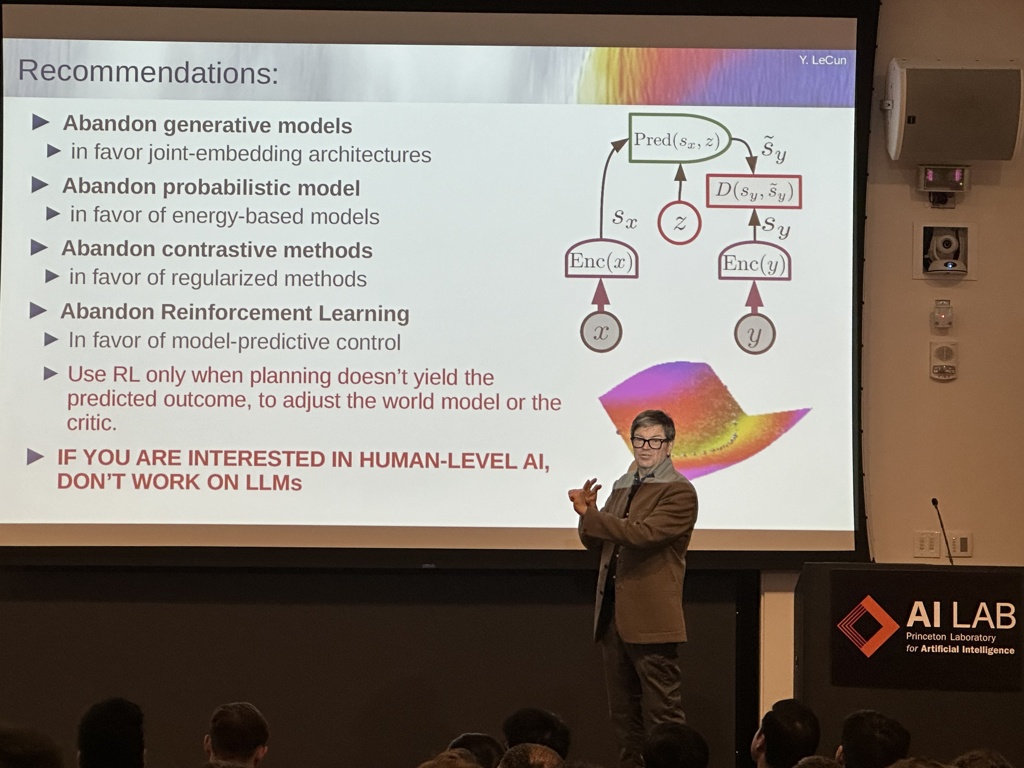

Top 3: Technology Pioneer Encounters (aka: Always chase meeting & talking to your heroes!)

Top 3: Porsches

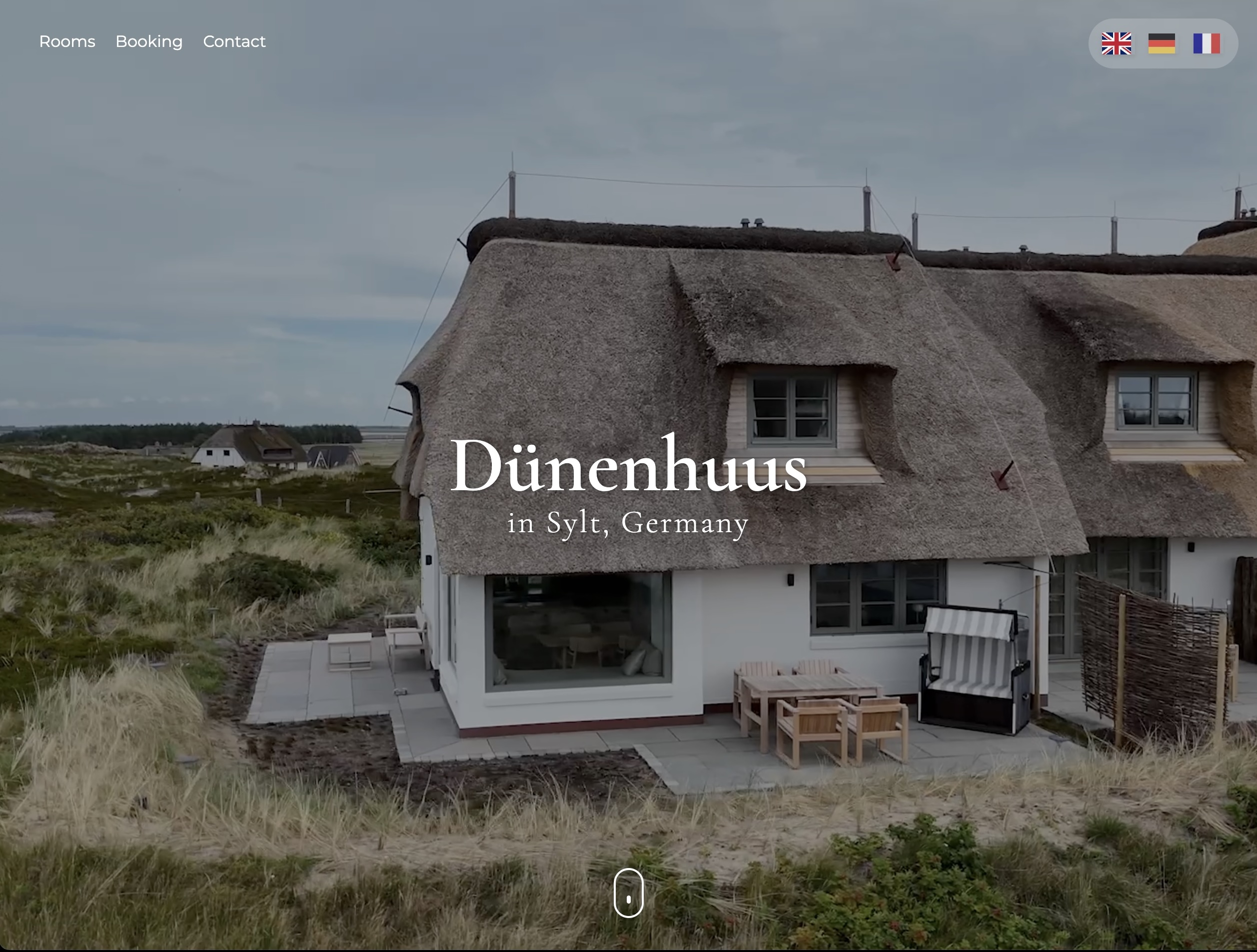

Top 3: Retreats

Top 3: Moments in the Porsche PhD Community

Top 3: Lunch & Dinner Routines

Top 3: Team Building Exercises

Top 3: Mentors

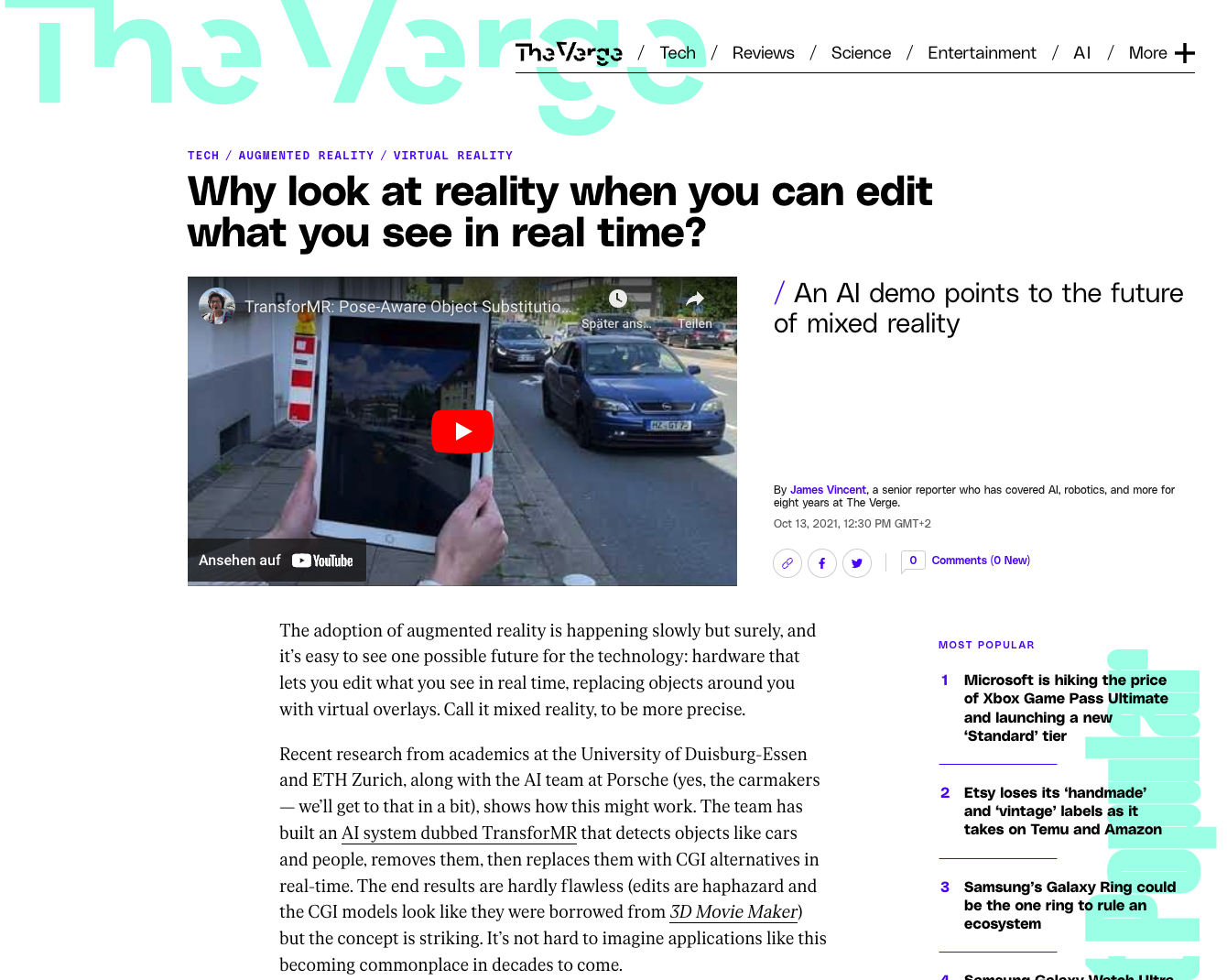

Top 3: Press Reports

Top 3: Interactions with the University President (Prof. Dr. Ulrich Radtke)

Top 5: Trajectory Changers

In spaceflight, planets can provide gravity assists to a spacecraft in order to alter its path. I was lucky enough to encounter, orbit, and swing by multiple extraordinary individuals who changed my PhD (and life) trajectory.

Top 3: Learnings on research and science

Scientists are story tellers. For a long time, I thought scientists say what is (re-contextualizing Augstein) and the scientific method produces its output as logically determined as a mechanical system. However, it turns out the scientific method is only a tool to figure out a story as it unfolds. Still, the scientist needs to tell it. At least, the impactful scientist.

In fact, I think every impactful individual is a story teller. Only by telling a story do you convince reviewers, students, supervisors, and job candidates. And, most importantly, convince yourself.

Of course, there are differences between scientific stories and other stories. Why do you tell a story? How do you tell a story? To whom do you tell a story? How convincing is your story? How much does your story correspond with reality, how plausible is it, and how well is it grounded in scientifically tenable statements? I'd say, scientists are some of the best story tellers in the world as they tell a previously untold story. An optimistic story. As stringent as it gets. An easy-to-follow story for an often highly complex, non-fictional plot.

Thoughts inspired by discussions with Reinhard Schütte, Tobias Grosse-Puppendahl, and Bettina Luescher.

Science is the endeavour of solving difficult problems under uncertainty. These difficult problems are generally rooted in the lack of non-trivial knowledge.

That's why great science is closely related to great entrepreneurship. Both activities are predominantly characterized by uncertainty. Sometimes, the whole problem and its boundaries are uncertain. Sometimes, the problem determinants are uncertain. And sometimes, "only" the solution to an already well-structured problem is uncertain.

Therefore, conducting research is mostly the process of eliminating uncertainties, e.g., through implementation, observation, or reasoning.

Thoughts inspired by discussions with Reinhard Schütte and Andreas Fender.

Individuals make the difference. Institutions have no talent, knowledge, skill, experience, or expertise. Individuals do.

Of course, institutions are better or worse at attracting individuals with talent, etc. But talented people can be where you don't expect them and vice versa. Therefore, you need to be on the constant lookout for talent.

In science, and any creative role really, this specifically means that if there is a great outcome, it is not due to the institution. It is because of one good individual. And maybe they were renting out DVDs in the video rental store, only to write and direct Pulp Fiction a few years later.

Sometimes, one might think there are whole teams or departments that created something. But there is no next-level support when you are already at the edge. Nope, in fact, the better the outcome, the lower the number of people that are behind it. Either you solve whatever problem, or it remains a problem. Either you are the expert, or no one is. Either you develop the skill, or no one has it. Either you do it, or no one does.

PS: Especially in larger organizations, there is a tendency to develop the belief that good processes can replace good individuals. That's a fallacy. (I've never heard anyone talk of Agile Development in any good software company. Just get your code into CI.)

Thoughts inspired by discussions with Reinhard Schütte, Christian Holz, and Daniel Cremers.

Podcasts

Camera Work

Aerial Videography

Travel Photography

Please see my travel photography website for more photos.